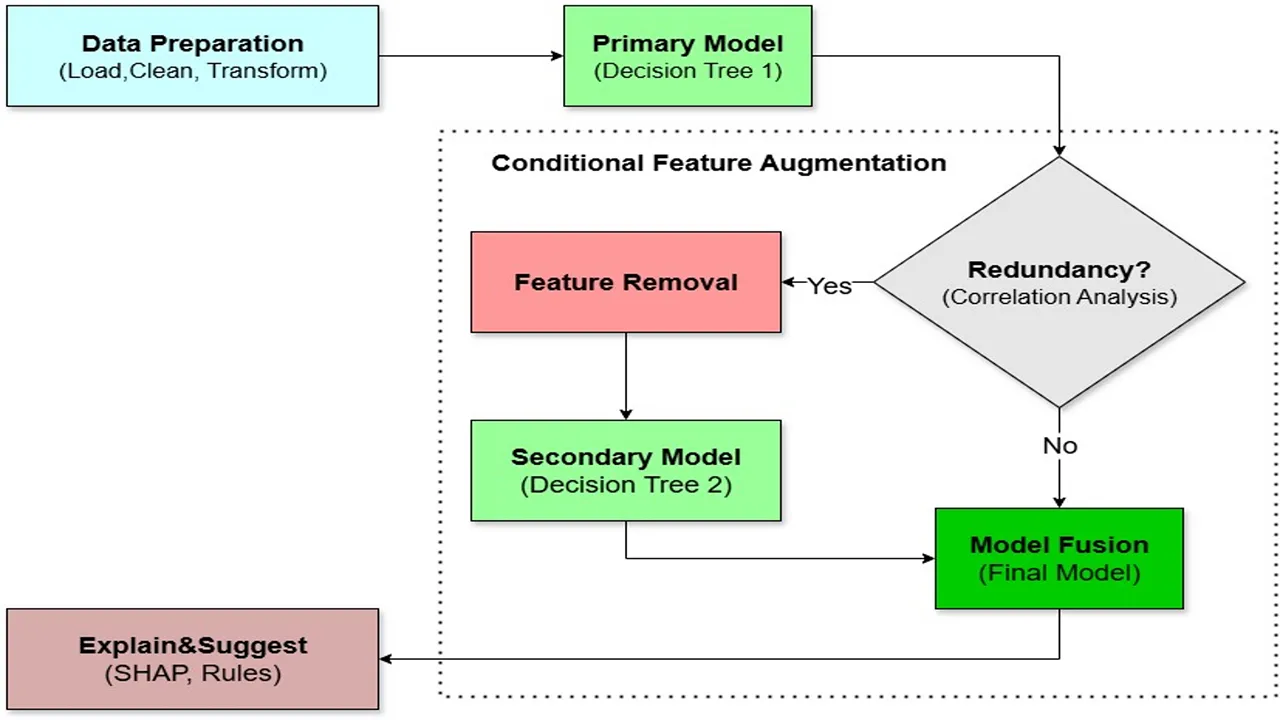

On October 2, 2025, the journal Scientific Reports (Nature Portfolio) published an article describing a new tool for pre-screening breast tumors for malignancy. A key feature of the model is its explainability (Explainable AI, XAI). Rather than acting as a "black box," the system, which is based on an ensemble of decision trees, predicts malignancy with about 92% accuracy and also uses SHAP (SHapley Additive exPlanations) to visualize the factors behind each prediction. Clinicians can therefore see which input features (for example, the texture or size of cell nuclei) had the greatest influence on a given result.

This transparency is critical for adopting AI in clinical practice. Instead of trusting the machine blindly, doctors can use it as a full-fledged assistant whose reasoning they can inspect, verify, and understand.
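To make the SHAP idea concrete, here is a minimal pure-Python sketch. It is not the paper's code. It assumes a toy ensemble of hand-written trees over three hypothetical nucleus features (`radius`, `texture`, `smoothness`) with made-up thresholds and scores. It computes exact Shapley values by brute-force enumeration of feature subsets against a single baseline input. The SHAP library's `TreeExplainer` uses a much faster tree-specific algorithm (TreeSHAP), so this sketch only illustrates what the attributions mean.

```python
from itertools import combinations
from math import factorial

# Hypothetical nucleus features; names, thresholds, and scores are made up.
FEATURES = ["radius", "texture", "smoothness"]


def stump(feature, threshold, low, high):
    """Build a one-split tree that returns `high` above the threshold, else `low`."""
    def tree(x):
        return high if x[feature] > threshold else low
    return tree


def interaction_tree(x):
    """Depth-2 tree: texture only matters once the radius split is taken."""
    if x["radius"] > 15.0:
        return 1.0 if x["texture"] > 20.0 else 0.5
    return 0.0


ENSEMBLE = [
    stump("radius", 15.0, 0.1, 0.9),
    stump("texture", 20.0, 0.2, 0.8),
    stump("smoothness", 0.1, 0.3, 0.6),
    interaction_tree,
]


def predict(x):
    """Average the tree outputs into a malignancy score, as a bagged ensemble would."""
    return sum(tree(x) for tree in ENSEMBLE) / len(ENSEMBLE)


def shapley_values(x, baseline):
    """Exact Shapley values by enumerating every coalition of the other features.

    Features outside a coalition S keep their baseline value, so
    v(S) = predict(x with the features not in S replaced by the baseline).
    """
    n = len(FEATURES)

    def value(coalition):
        mixed = {f: x[f] if f in coalition else baseline[f] for f in FEATURES}
        return predict(mixed)

    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for k in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of size k.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


baseline = {"radius": 12.0, "texture": 18.0, "smoothness": 0.09}  # reference input
patient = {"radius": 17.5, "texture": 24.0, "smoothness": 0.08}   # case to explain
phi = shapley_values(patient, baseline)
print(f"baseline score {predict(baseline):.4f}, patient score {predict(patient):.4f}")
for name, contribution in sorted(phi.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>10}: {contribution:+.4f}")
```

The attributions add up to the difference between the patient's score and the baseline score, which is the efficiency property of Shapley values. In this toy case, `radius` receives the largest share, partly through the interaction tree. `smoothness` receives zero because both of its values fall on the same side of the only split that uses it. The number of model calls grows exponentially with the number of features, which is why practical tools rely on tree-specific algorithms instead of enumeration.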

Explainable AI Tool for Breast Cancer Pre-Screening Published