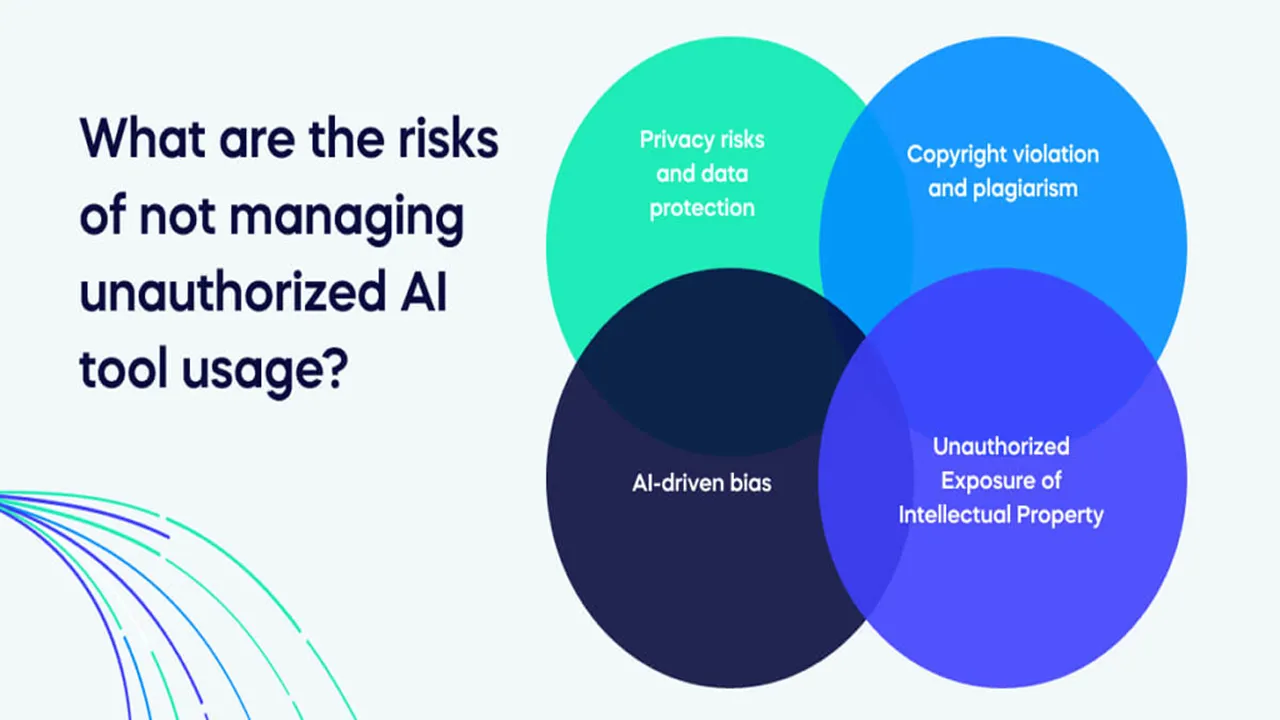

A BNN Bloomberg report from August 24, 2025, sheds light on a growing threat to corporate security: employees' use of unauthorized AI tools, also known as Shadow AI. To boost their productivity, workers are increasingly turning to public generative AI services and pasting confidential information into them, including code snippets, financial data, customer lists, and internal communications. This creates serious risks of intellectual property leakage and compliance violations, particularly under data protection laws such as GDPR. Security experts interviewed for the report warn that data entered into public AI models can be used for training and may later surface in responses to other users. The solution is not a total ban but a clear corporate policy: providing employees with safe, vetted enterprise versions of AI tools, and conducting mandatory training on safe use practices and on why confidential data must not be shared with external services.

Report: Employees Using Unauthorized AI Put Businesses at Risk