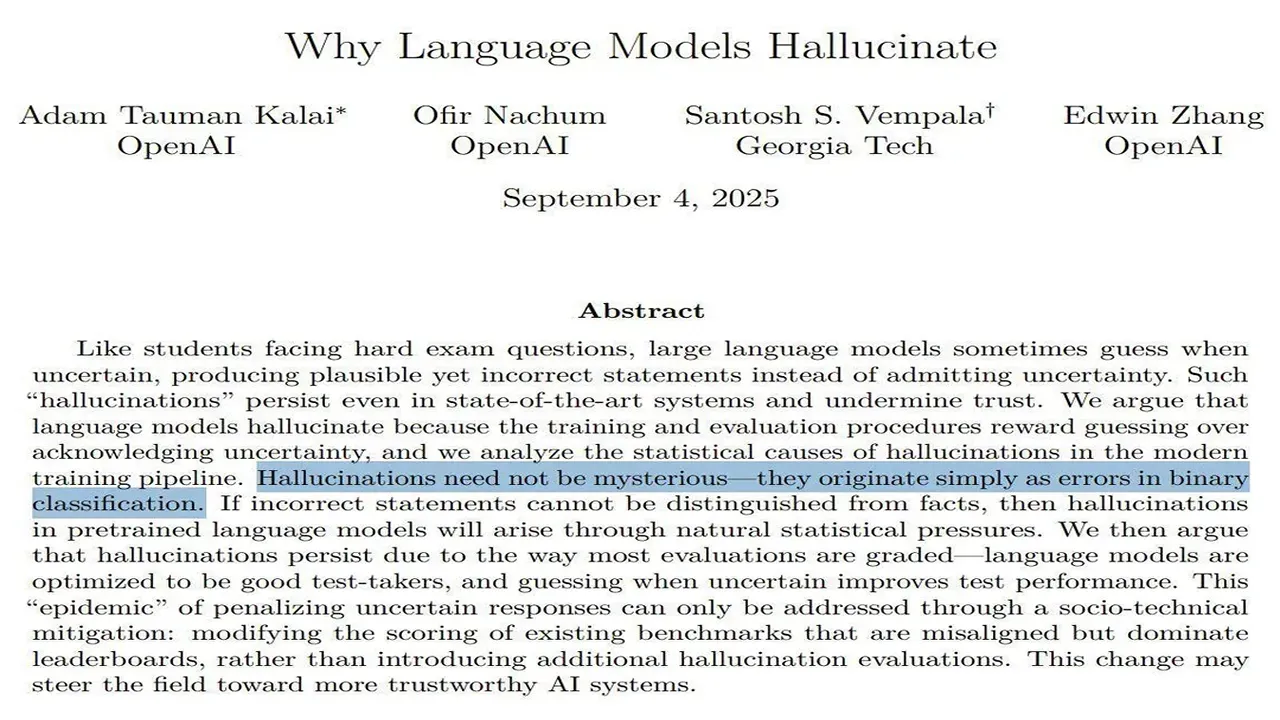

OpenAI has published research explaining the fundamental reason large language models (LLMs) hallucinate. According to the paper, published on arXiv, the problem lies less in model architecture than in the methods used to train and evaluate the models. Current benchmarks and evaluation systems are designed in a way that encourages a model to give some plausible answer rather than admit it doesn't know. Like a student taking an exam with no penalty for wrong answers, an LLM is statistically better off "guessing" and possibly scoring a point than saying "I don't know" and guaranteeing a zero. As a result, the existing training and testing paradigm systematically penalizes the expression of uncertainty and rewards confident but potentially false answers.

OpenAI calls for "socio-technical" changes: revising popular benchmarks and introducing metrics that reward not only accuracy but also "calibrated uncertainty", that is, a model's ability to honestly assess its own knowledge.
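The exam analogy comes down to simple expected-value arithmetic. The sketch below is an illustration, not code or a scoring rule taken from the paper. It assumes a correct answer earns +1 point and "I don't know" earns 0. For the penalized case it assumes a hypothetical deduction of t / (1 - t) points for a wrong answer, where t is a confidence threshold set by the grader. Under that rule, answering beats abstaining only when the model's probability of being right exceeds t. The function names are hypothetical.

```python
# Illustrative sketch: expected score of guessing vs. abstaining under two grading schemes.
# Assumed scoring (not from the paper): correct = +1, "I don't know" = 0,
# wrong = -penalty, where penalty = 0 for accuracy-only grading.

ABSTAIN_SCORE = 0.0  # "I don't know" always earns exactly zero


def wrong_answer_penalty(threshold: float) -> float:
    """Hypothetical penalty t / (1 - t); answering then pays off only when p_correct > t."""
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    return threshold / (1.0 - threshold)


def expected_score(p_correct: float, penalty: float) -> float:
    """Expected points from answering: +1 with probability p_correct, -penalty otherwise."""
    return p_correct * 1.0 - (1.0 - p_correct) * penalty


def best_action(p_correct: float, penalty: float) -> str:
    """Pick whichever action has the higher expected score (ties go to abstaining)."""
    return "answer" if expected_score(p_correct, penalty) > ABSTAIN_SCORE else "abstain"


if __name__ == "__main__":
    p = 0.2  # the model thinks its best guess is right only 20% of the time

    # Accuracy-only grading: a wrong answer costs nothing, so any guess beats abstaining.
    print("binary grading:", best_action(p, 0.0), round(expected_score(p, 0.0), 2))

    # Penalized grading with threshold t = 0.5: a wrong answer now costs 1 point.
    pen = wrong_answer_penalty(0.5)
    print("penalized grading:", best_action(p, pen), round(expected_score(p, pen), 2))
```

With accuracy-only grading, even a 20%-confident guess has a positive expected score (0.2), so guessing always wins. With the assumed threshold of 0.5, the same guess has an expected score of -0.6, so saying "I don't know" becomes the better strategy. This is the kind of incentive shift the proposed benchmark changes aim for.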

OpenAI Research: Models Hallucinate Because They Are Rewarded for "Guessing"